This is the final part of my two-part blog series on the new Custom Metadata feature in Summer ’15. The first blog focused on defining the Custom Metadata Type (MDT for short) and on reading and writing record data with the resulting SObject, using a combination of SOQL and the Apex Metadata API. In this blog I will walk through the remainder of my proof-of-concept experience with the new Custom Metadata feature. It focuses on three things: building a custom UI (there is no native UI support yet), AppExchange packaging and, finally, trying it out with Change Sets!

Custom UIs for Custom Metadata Types

As described in the previous blog, native DML in Apex against MDT SObjects is not yet supported. Updating MDT records therefore means making an HTTP SOAP API (Metadata API) call. This makes the execution flow in the controller a little different from normal. My solution may not be the only one, but it was simple and elegant enough for my use case. As this was a POC, I really just wanted to focus on the mechanics rather than the aesthetics or user experience. Even so, in the end I’m quite pleased with it all.

- Creating Records. When the user first visits the page, they see Create new… in the drop down and the Developer Name field is enabled. Hitting Save creates the record, refreshes the page and switches to edit mode. At this stage the Developer Name field is disabled and the drop down shows the newly created record selected by default.

- Editing Records. In this mode the Developer Name (Object Name) field is disabled and Delete is enabled. Users can hit Save or Delete, or pick another record from the drop down to edit.

- Deleting Records. Picking an existing record from the drop down enables the Delete button. Once the user clicks it, the record is deleted and the page refreshes (it’s a POC, so there are no second chances with confirmation prompts!). The page then defaults back to selecting Create new… in the drop down.

So that’s a simple walkthrough of the UI done; let’s look at how it was built. I wanted to leverage the fact that MDTs result in actual SObjects. So I initially set about using some of the standard Visualforce components, such as apex:inputField, to get the labels and some field validation.

The SObject record itself cannot be natively inserted, and the platform currently extends this restriction to the fields themselves, so they are marked in the schema as read only. apex:inputField of course honours this and consequently displays nothing. This is a shame, as I really wanted some UI validation for free. As you can see from the sample code below, I switched to using apex:inputText, apex:inputCheckbox and so on. These still let me reuse the Custom Field labels, since they are placed within an apex:pageBlockSection.

```html
<apex:page controller="ManageLookupRollupSummariesController" showHeader="true"
    sidebar="true" action="{!init}">
    <apex:form>
        <apex:sectionHeader title="Manage Lookup Rollup Summaries"/>
        <apex:outputLabel value="Select Lookup Rollup Summary:" />
        <apex:selectList value="{!SelectedLookup}" size="1">
            <apex:actionSupport event="onchange" action="{!load}" reRender="rollupDetailView"/>
            <apex:selectOptions value="{!Lookups}"/>
        </apex:selectList>
        <p/>
        <apex:pageMessages/>
        <apex:pageBlock mode="edit" id="rollupDetailView">
            <apex:pageBlockButtons>
                <apex:commandButton value="Save" action="{!save}"/>
                <apex:commandButton value="Delete" action="{!deleteX}" disabled="{!LookupRollupSummary.Id==null}"/>
            </apex:pageBlockButtons>
            <apex:pageBlockSection title="Information" columns="2">
                <apex:inputText value="{!LookupRollupSummary.Label}"/>
                <apex:inputText value="{!LookupRollupSummary.DeveloperName}" disabled="{!LookupRollupSummary.Id!=null}"/>
            </apex:pageBlockSection>
            <apex:pageBlockSection title="Lookup Relationship" columns="2">
                <apex:inputText value="{!LookupRollupSummary.ParentObject__c}"/>
                <apex:inputText value="{!LookupRollupSummary.RelationshipField__c}"/>
                <apex:inputText value="{!LookupRollupSummary.ChildObject__c}"/>
                <apex:inputText value="{!LookupRollupSummary.RelationshipCriteria__c}"/>
                <apex:inputText value="{!LookupRollupSummary.RelationshipCriteriaFields__c}"/>
            </apex:pageBlockSection>
            <!-- Cut down example, see Gist link for full code -->
        </apex:pageBlock>
    </apex:form>
</apex:page>
```
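You can check the read-only marking for yourself with a small describe-based helper that reports which fields the schema treats as writable. This is my own sketch and not part of the POC code. The class name MdtFieldAccessInspector is hypothetical, and the helper relies only on the standard Schema describe calls getDescribe(), isCreateable() and isUpdateable(). Bear in mind that describe results also reflect the running user's field-level security.

```apex
// Hypothetical diagnostic helper (not part of the POC): reports which fields on
// a given SObject type the schema describes as createable or updateable.
public with sharing class MdtFieldAccessInspector {

    public static Set<String> writableFields(Schema.SObjectType sObjectType) {
        Set<String> writable = new Set<String>();
        Map<String, Schema.SObjectField> fieldMap =
            sObjectType.getDescribe().fields.getMap();
        for (Schema.SObjectField field : fieldMap.values()) {
            Schema.DescribeFieldResult describe = field.getDescribe();
            // Describe results take the running user's field-level security into account
            if (describe.isCreateable() || describe.isUpdateable()) {
                writable.add(describe.getName());
            }
        }
        return writable;
    }
}
```

From Execute Anonymous, run `System.debug(MdtFieldAccessInspector.writableFields(LookupRollupSummary__mdt.SObjectType));`. If the behaviour described above holds, this should print an empty set.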

You will also see that it’s a totally custom controller, since there is no standard controller support yet either. Other than that, using Visualforce with MDT SObjects is a fairly basic affair once the custom controller exposes an instance of one. In my case this is done via the LookupRollupSummary property. The full source code for the controller can be found here. Let’s walk through the code relating to each stage of its life cycle, starting with page construction…

```apex
public with sharing class ManageLookupRollupSummariesController {

    public LookupRollupSummary__mdt LookupRollupSummary {get;set;}

    public String selectedLookup {get;set;}

    public ManageLookupRollupSummariesController() {
        // Default to a blank record, i.e. "Create new..." mode
        LookupRollupSummary = new LookupRollupSummary__mdt();
    }

    public List<SelectOption> getLookups() {
        // List current rollup custom metadata configs
        List<SelectOption> options = new List<SelectOption>();
        options.add(new SelectOption('[new]','Create new...'));
        for(LookupRollupSummary__mdt rollup :
                [select DeveloperName, Label from LookupRollupSummary__mdt order by Label])
            options.add(new SelectOption(rollup.DeveloperName,rollup.Label));
        return options;
    }

    public PageReference init() {
        // URL parameter?
        selectedLookup = ApexPages.currentPage().getParameters().get('developerName');
        if(selectedLookup!=null) {
            LookupRollupSummary =
                [select
                    Id, Label, Language, MasterLabel, NamespacePrefix, DeveloperName, QualifiedApiName,
                    ParentObject__c, RelationshipField__c, ChildObject__c, RelationshipCriteria__c,
                    RelationshipCriteriaFields__c, FieldToAggregate__c, FieldToOrderBy__c,
                    Active__c, CalculationMode__c, AggregateOperation__c, CalculationSharingMode__c,
                    AggregateResultField__c, ConcatenateDelimiter__c, CalculateJobId__c, Description__c
                 from LookupRollupSummary__mdt
                 where DeveloperName = :selectedLookup];
        }
        return null;
    }

    // load(), save() and deleteX() are shown below
}
```

Note: DeveloperName is used here because, when redirecting after record creation, I don’t yet have the ID. This is more a reflection of the CustomMetadataService being incomplete at this stage, and DeveloperName makes a good alternative. Also note that the SOQL code above is not recommended for production use. You should always query into a list and gracefully handle the scenario where the record does not exist.
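Here is a minimal sketch of the list-based approach suggested in the note. The class and method names are hypothetical and not part of the original controller. It assumes the LookupRollupSummary__mdt type from this series, and its field list is trimmed, so extend it to match the full query above.

```apex
// Hypothetical helper (not from the original controller): query into a list so
// a missing record yields null rather than a System.QueryException.
public with sharing class LookupRollupSummaryFinder {

    public static LookupRollupSummary__mdt findByDeveloperName(String developerName) {
        if (String.isBlank(developerName)) {
            return null;
        }
        List<LookupRollupSummary__mdt> records =
            [select Id, Label, DeveloperName, ParentObject__c, RelationshipField__c, ChildObject__c
             from LookupRollupSummary__mdt
             where DeveloperName = :developerName
             limit 1];
        return records.isEmpty() ? null : records[0];
    }
}
```

In init, a null result could fall back to `new LookupRollupSummary__mdt()`. The method could then add a warning via `ApexPages.addMessage(new ApexPages.Message(ApexPages.Severity.WARNING, ...))` instead of surfacing an unhandled exception.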

The controller constructor creates a new instance of the MDT SObject type, since the default behaviour is to create a new record. After that, the init method fires because of the action binding on the apex:page tag. This method determines whether the URL carries a reference to a specific MDT record and, if so, loads it via SOQL. The getLookups method also uses SOQL, in this case to query the existing records and populate the drop down.

As the user selects items from the drop down, the load method refreshes the page with a URL carrying the selected record. The init method then loads that record into the LookupRollupSummary property, which results in its display on the page.

```apex
public PageReference load() {
    // Reload the page
    PageReference newPage = Page.managelookuprollupsummaries;
    newPage.setRedirect(true);
    if(selectedLookup != '[new]')
        newPage.getParameters().put('developerName', selectedLookup);
    return newPage;
}
```
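Because load only builds a PageReference, it is easy to cover with a unit test that never touches the Metadata API. Here is a sketch that assumes the controller and the managelookuprollupsummaries page from this post are deployed. The test class name is my own.

```apex
// Hypothetical unit test sketch for the load() method shown above.
@IsTest
private class ManageLookupRollupSummariesControllerTest {

    @IsTest
    static void loadCarriesSelectedDeveloperName() {
        ManageLookupRollupSummariesController controller = new ManageLookupRollupSummariesController();
        controller.selectedLookup = 'Test';
        PageReference result = controller.load();
        // load() should force a client-side redirect carrying the selection
        System.assert(result.getRedirect());
        System.assertEquals('Test', result.getParameters().get('developerName'));
    }

    @IsTest
    static void loadOmitsParameterForNewRecord() {
        ManageLookupRollupSummariesController controller = new ManageLookupRollupSummariesController();
        controller.selectedLookup = '[new]';
        PageReference result = controller.load();
        // No developerName parameter means init() stays in "Create new..." mode
        System.assertEquals(null, result.getParameters().get('developerName'));
    }
}
```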

Finally, we see the heart of the controller: the save and deleteX methods.

```apex
public PageReference save() {
    try {
        // Insert / Update the rollup custom metadata
        if(LookupRollupSummary.Id==null) {
            CustomMetadataService.createMetadata(new List<SObject> { LookupRollupSummary });
        } else {
            CustomMetadataService.updateMetadata(new List<SObject> { LookupRollupSummary });
        }
        // Reload this page (and thus the rollup list) in a new request; metadata changes are not visible until this request ends
        PageReference newPage = Page.managelookuprollupsummaries;
        newPage.setRedirect(true);
        newPage.getParameters().put('developerName', LookupRollupSummary.DeveloperName);
        return newPage;
    } catch (Exception e) {
        ApexPages.addMessages(e);
    }
    return null;
}

public PageReference deleteX() {
    try {
        // Delete the rollup custom metadata
        CustomMetadataService.deleteMetadata(
            LookupRollupSummary.getSObjectType(), new List<String> { LookupRollupSummary.DeveloperName });
        // Reload this page (and thus the rollup list) in a new request; metadata changes are not visible until this request ends
        PageReference newPage = Page.managelookuprollupsummaries;
        newPage.setRedirect(true);
        return newPage;
    } catch (Exception e) {
        ApexPages.addMessages(e);
    }
    return null;
}
```

These methods leverage the ones I created on the CustomMetadataService class, introduced in my previous blog, to do the bulk of the work. In keeping with Separation of Concerns, the controller sticks to what it is supposed to be doing. What’s important here is that you are effectively making an HTTP outbound call to insert, update or delete the records. As a result, the context your code is currently running in will not see the change until that context has ended. This means you cannot simply issue a new SOQL query to refresh the drop down list in the controller state and have it refreshed on the page. My solution to this, admittedly a heavy-handed one, is to return my page reference with the developerName URL parameter set and setRedirect(true). This causes a client-side redirect, so the page initialisation fires again in a new request that can see the inserted or updated record information.
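The save, deleteX and load methods all build essentially the same redirect. If you adopt this approach, one option is to centralise it so the "reload in a new request" rule lives in one place. This is only a sketch. The class and method names are hypothetical, and Page.managelookuprollupsummaries is the page from this post.

```apex
// Hypothetical helper (not in the original POC): builds the client-side redirect
// used after a Metadata API write, so the page re-initialises in a new request
// that can see the inserted, updated or deleted record.
public with sharing class LookupRollupPages {

    public static PageReference reload(String developerName) {
        PageReference newPage = Page.managelookuprollupsummaries;
        newPage.setRedirect(true);
        // Omit the parameter to fall back to "Create new..." mode
        if (String.isNotBlank(developerName) && developerName != '[new]') {
            newPage.getParameters().put('developerName', developerName);
        }
        return newPage;
    }
}
```

With this helper, save would end with `return LookupRollupPages.reload(LookupRollupSummary.DeveloperName);`, deleteX with `return LookupRollupPages.reload(null);` and load with `return LookupRollupPages.reload(selectedLookup);`.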

Populating MDT SObject Fields in Apex

As I mentioned above, MDT SObject fields are marked as read only. Visualforce bindings appear to ignore this, but Apex code is not immune to it. The following code fails to save with…

Field is not writeable: LookupRollupSummary__mdt.DeveloperName

```apex
CustomMetadataService.createMetadata(
    new List<LookupRollupSummary__mdt> {
        new LookupRollupSummary__mdt(
            DeveloperName = 'Test',
            MasterLabel = 'Test Label',
            Active__c = true,
            ChildObject__c = 'Opportunity',
            ParentObject__c = 'Account',
            RelationshipField__c = 'AccountId')
    });
```

Not to be deterred from using compiler-checked SObject Custom Field references, I have updated the CustomMetadataService to take a map of SObjectField tokens and values, which looks like this.

```apex
CustomMetadataService.createMetadata(
    // Custom Metadata Type
    LookupRollupSummary__mdt.SObjectType,
    // List of records
    new List<Map<SObjectField, Object>> {
        // Record
        new Map<SObjectField, Object> {
            LookupRollupSummary__mdt.DeveloperName => 'Test',
            LookupRollupSummary__mdt.MasterLabel => 'Test Label',
            LookupRollupSummary__mdt.Active__c => true,
            LookupRollupSummary__mdt.ChildObject__c => 'Opportunity',
            LookupRollupSummary__mdt.ParentObject__c => 'Account',
            LookupRollupSummary__mdt.RelationshipField__c => 'AccountId'
        }
    });
```

It’s not ideal, but it does allow you to get as much reuse out of the MDT SObject type as possible. That will have to do until Salesforce executes more of its roadmap and opens up native read/write access to this object.
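The updated CustomMetadataService internals are not shown here. Still, the core step of any token-based API like this is turning each SObjectField token back into a field API name. Below is a minimal sketch of that step using the standard getDescribe().getName() call. The class name is hypothetical, and the sketch deliberately leaves out how DeveloperName and MasterLabel map onto the Metadata API record.

```apex
// Hypothetical sketch: flatten a map of compiler-checked SObjectField tokens
// into API-name/value pairs, ready to be copied onto a Metadata API request.
public with sharing class MdtFieldValues {

    public static Map<String, Object> byApiName(Map<Schema.SObjectField, Object> values) {
        Map<String, Object> result = new Map<String, Object>();
        for (Schema.SObjectField field : values.keySet()) {
            result.put(field.getDescribe().getName(), values.get(field));
        }
        return result;
    }
}
```

Because the tokens are compile-time references, renaming or deleting a field such as ChildObject__c breaks the build rather than failing at runtime. In a namespaced package, check whether getName() or getLocalName() returns the form your Metadata API call expects.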

Packaging Custom Metadata Types and Records!

Thankfully, this is a UI-driven process, much as it is for any other component. Simply go to your Package Definition and add your Custom Metadata Type, if it has not already been brought in through the SObject type references in your Visualforce or Apex code. Once added, it is shown as a Custom Object on the package detail page.

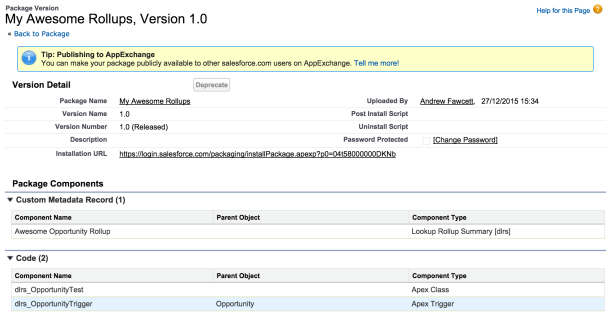

If you desire, you can also package any MDT records you have created, which is actually very cool! No longer do you have to write a post-install Apex script to populate, for example, your own default configurations. You can just package them as you would any other piece of metadata. For example, here is a screenshot of a default rollup I created in the packaging org, showing up on the Add to Package screen. Once installed, this record will be available for your packaged Apex code to query.

This then shows up as a Custom Metadata Record in your Packaged Components summary!
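Packaged records arrive in the subscriber org alongside any records the subscriber creates, so packaged code may want to tell them apart. One approach is to check the NamespacePrefix field, which is already selected in the init query above. This rests on my own assumption that packaged records carry the package namespace while subscriber-created records in an org without a namespace have none, and that assumption is worth verifying in your own orgs. The class name is hypothetical.

```apex
// Hypothetical sketch: return only the rollup records shipped in a managed
// package, assuming those records carry the package namespace prefix.
public with sharing class PackagedRollupDefaults {

    public static List<LookupRollupSummary__mdt> packagedRecords() {
        List<LookupRollupSummary__mdt> packaged = new List<LookupRollupSummary__mdt>();
        for (LookupRollupSummary__mdt record :
                [select DeveloperName, Label, NamespacePrefix
                 from LookupRollupSummary__mdt
                 order by Label]) {
            // Subscriber-created records are assumed to have no namespace prefix
            if (String.isNotBlank(record.NamespacePrefix)) {
                packaged.add(record);
            }
        }
        return packaged;
    }
}
```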

Finally, note that you can have protected Custom Metadata Types (much like Custom Settings). With these, only your packaged Apex code can read the Custom Metadata records you have packaged, and subscribers of your package cannot add new records. In this case you would want to use the aforementioned ability to package your MDT records. The Metadata API cannot access protected MDTs that have been installed via a managed package.

Trying out Change Sets

I created test Enterprise Edition and Sandbox orgs, installed my POC package in both and eagerly started trying out Change Sets with my new Custom Metadata Type! I admit this is the first time I’ve actually used Change Sets! #ISVDeveloper. The use case I wanted to experience here is simply as follows…

- User defines or updates some custom configuration in Sandbox and tests it

- User wants to promote that change to Production as simply as possible

In a world before Custom Metadata, platform-on-platform tools such as Declarative Lookup Rollup Summary handled this use case in one of two ways. Either the user had to manually re-enter the configuration, or they had to export the configuration data as a CSV, download it to a desktop and re-upload it in the Production org. This dance is simply a thing of the past in the world of Custom Metadata! Here is what I did to try this out for the first time!

- Set up my custom metadata records via my Custom UI in the Sandbox

- Created a Sandbox Change Set with my records in it and uploaded it

- Deployed my Change Set in the Enterprise org, and job done!

Conclusion and Next Steps for Declarative Rollup Summary Tool?

I started this as a means to get a better practical understanding of this great new platform feature for Summer ’15, and I definitely feel I’ve got that. It’s a little bittersweet at present because of its slightly raw barrier to entry. Once you get past the definition stage, though, it’s actually a pretty straightforward native experience. Once Salesforce enables a native UI for this area, I’m sure it will take off even more! My only other wish is native Apex support for writing to them. I totally understand, however, that the larger elephant in the corner on this one is native Metadata API support (up vote idea here).

So do I feel I can migrate my Declarative Lookup Rollup Summary (DLRS) tool to Custom Metadata now? The answer is probably yes, but would I ditch the current Custom Object approach in doing so? Well, no. I think it’s prudent to offer both ways of defining rollups for now. I could perhaps offer the Custom Metadata approach as a pilot feature to users of this tool, leveraging an updated version of the pilot UI that already exists as a Custom UI. The tool already uses Apex Enterprise Patterns and separation of concerns, so I can certainly see how to start weaving in dual-mode support without too much disruption. It’s certainly not a single-weekend job, but it sounds like a fun one. I’ll perhaps map out the tasks in a GitHub enhancement and see if my fellow contributors would like to join in!

Other Great Custom Metadata Resources!

In addition to my first blog in this series, here are some resources I found while doing my research. Please let me know if there are others, and I’ll be happy to add them to the list!

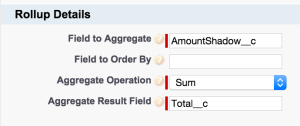

Either way i knew i had to have some kind of physical field other than a Formula field on the Quote Line Item object to notify the rollup tool of a change that would trigger a recalculate of the Quote > Total. I called this field Amount (Shadow) in this case, i also left it off my layout.

Either way i knew i had to have some kind of physical field other than a Formula field on the Quote Line Item object to notify the rollup tool of a change that would trigger a recalculate of the Quote > Total. I called this field Amount (Shadow) in this case, i also left it off my layout.

Wow, this is a BIG thing and opens up a lot of tooling and greater compliance support for changes around orgs. Prior to this, folks where so keen to get hold of this information programatically they would resort to

Wow, this is a BIG thing and opens up a lot of tooling and greater compliance support for changes around orgs. Prior to this, folks where so keen to get hold of this information programatically they would resort to

Creating Records. The user first goes to the page and they see Create new…. in the drop down and the Developer Name field is enabled, hitting Save creates the record, refreshes the page and switches to edit mode. At this stage the Developer Name field is disabled and the drop down shows the recently created record selected by default.

Creating Records. The user first goes to the page and they see Create new…. in the drop down and the Developer Name field is enabled, hitting Save creates the record, refreshes the page and switches to edit mode. At this stage the Developer Name field is disabled and the drop down shows the recently created record selected by default. Editing Records. In this mode the Developer Name (Object Name) field is disabled and Delete is enabled, users can either hit Save, Delete or pick another record from the drop down to Edit.

Editing Records. In this mode the Developer Name (Object Name) field is disabled and Delete is enabled, users can either hit Save, Delete or pick another record from the drop down to Edit. This is a thankfully UI driven process, much as you would any other component. Simply go to your Package Definition and add your Custom Metadata Type, if it has not already been brought in already through your SObject type references in your Visualforce or Apex code. Once added is shown as a Custom Object in the package detail page.

This is a thankfully UI driven process, much as you would any other component. Simply go to your Package Definition and add your Custom Metadata Type, if it has not already been brought in already through your SObject type references in your Visualforce or Apex code. Once added is shown as a Custom Object in the package detail page.

I was pleased and proud to see Salesforce themselves leveraging the

I was pleased and proud to see Salesforce themselves leveraging the

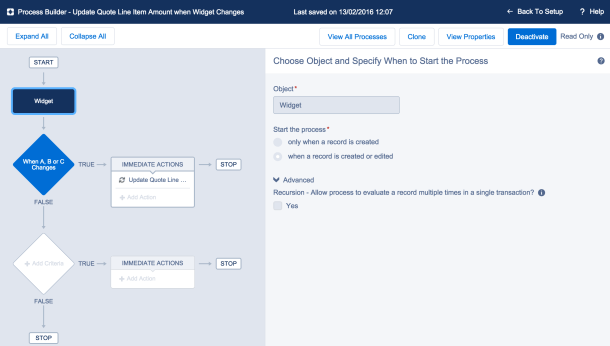

I have just spent a very enjoyable Saturday morning in my Spring’15 Preview org with a special build of the LittleBits Connector. That leverages the ability for Process Builder to callout to specially annotated Apex code that in turn calls out to the LittleBits Cloud API.

I have just spent a very enjoyable Saturday morning in my Spring’15 Preview org with a special build of the LittleBits Connector. That leverages the ability for Process Builder to callout to specially annotated Apex code that in turn calls out to the LittleBits Cloud API.