I have been working with Agentforce for a while, and, as is typically the case, I find myself drawn to platform features that allow extensibility; then my mind starts spinning with ideas on how to extract the maximum value from them! This blog post explores the Rich Text (a subset of HTML) output option to give your agents’ responses a bit more flair, readability, and even some graphical capability.

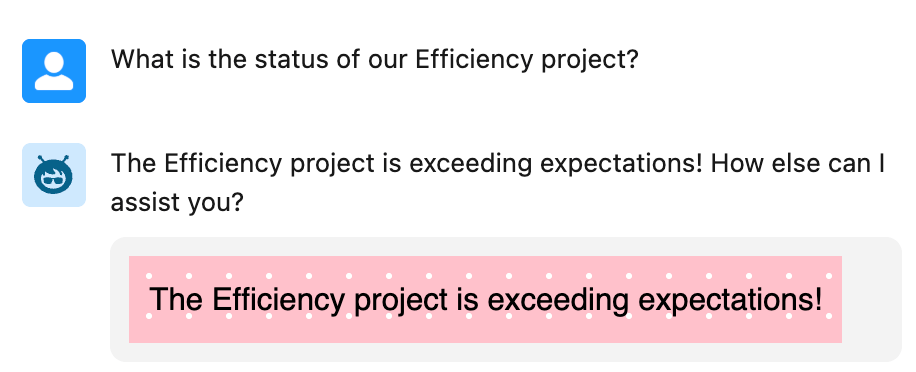

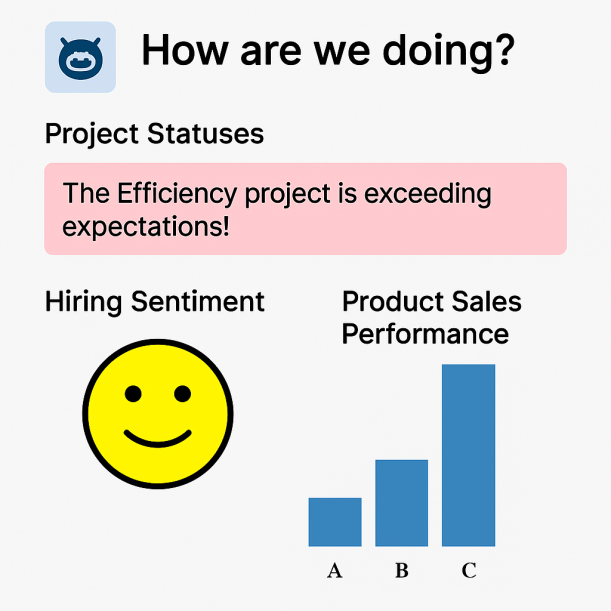

Agentforce Actions typically return data values that the AI massages into human-readable responses such as “Project sentiment is good”, “Product sales update: 50% for A, 25% for B, and 25% for C”, or “Project performance is exceeding expectations; well done!”. While these are informative, they could be more eye-catching and, in some cases, would benefit from a more visual alternative. Although we are now getting the ability to use LWCs in Agentforce for ultimate control over both rendering and input, Rich Text is a middle-ground approach that does not always require code. With perhaps a bit of HTML or SVG knowledge and/or some AI assistance, you can achieve results like this in Agentforce chat:

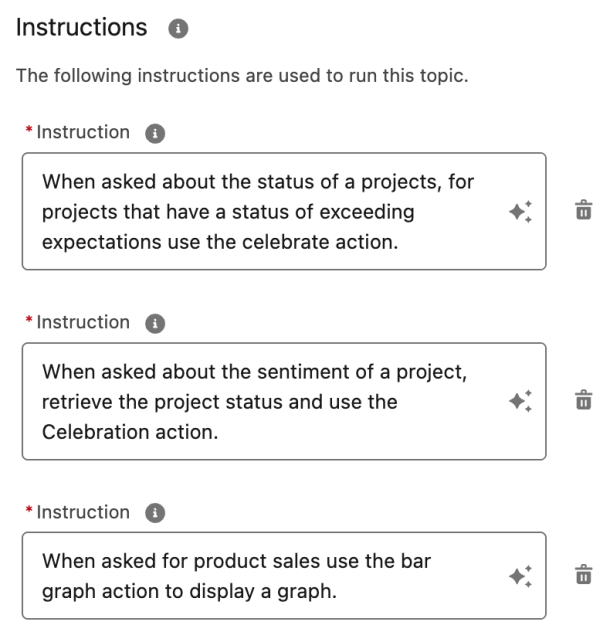

Let's start with a Flow example, as Flow also supports Rich Text through its Text Template feature. Here is an example of a Flow action that can be added to a topic, with instructions telling the agent to use it when presenting good news to the user, perhaps in collaboration with a project status query action.

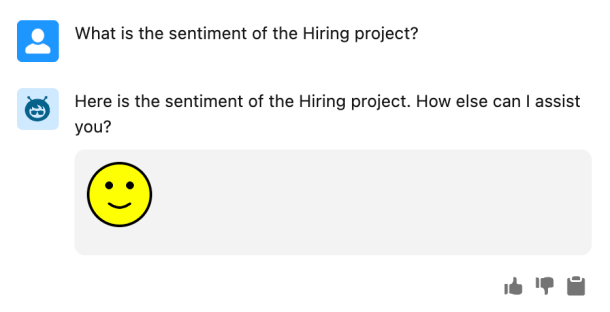

In this next example, a Flow conditionally assigns its output from one of several Text Templates based on an input of negative, neutral, or positive, perhaps used in conjunction with a project sentiment query action.

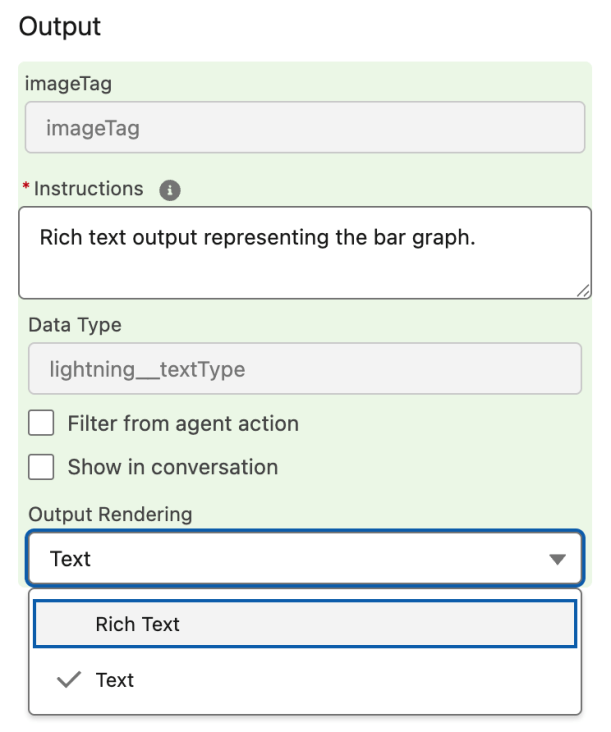

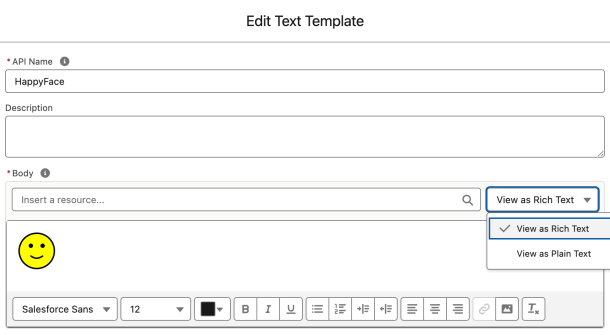

The Edit Text Template dialog allows you to create Rich Text with the toolbar or enter HTML directly. Using the HTML option, we can enter the smile SVG shown below:

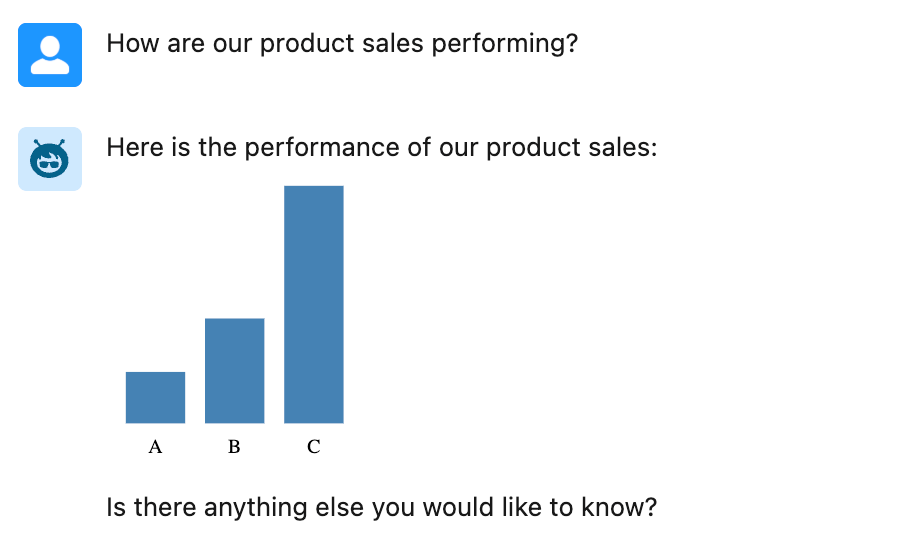

```xml
<svg xmlns='http://www.w3.org/2000/svg' width='50' height='50'><circle cx='25' cy='25' r='24' fill='yellow' stroke='black' stroke-width='2'/><circle cx='17' cy='18' r='3' fill='black'/><circle cx='33' cy='18' r='3' fill='black'/><path d='M17 32 Q25 38 33 32' stroke='black' stroke-width='2' fill='none' stroke-linecap='round'/></svg>
```

For a more dynamic approach, we can break out into Apex and use SVG once again to generate graphs, perhaps in collaboration with an action that retrieves product sales data.

The full Apex is stored in a Gist here, but in essence it's doing this:

@InvocableMethod(

label='Generate Bar Chart'

description='Creates a bar chart SVG as an embedded <img> tag.')

public static List<ChartOutput> generateBarChart(List<ChartInput> inputList) {

...

String svg = buildSvg(dataMap);

String imgTag = '<img src="data:image/svg+xml,' + svg + '"/>';

return new List<ChartOutput>{ new ChartOutput(imgTag) };

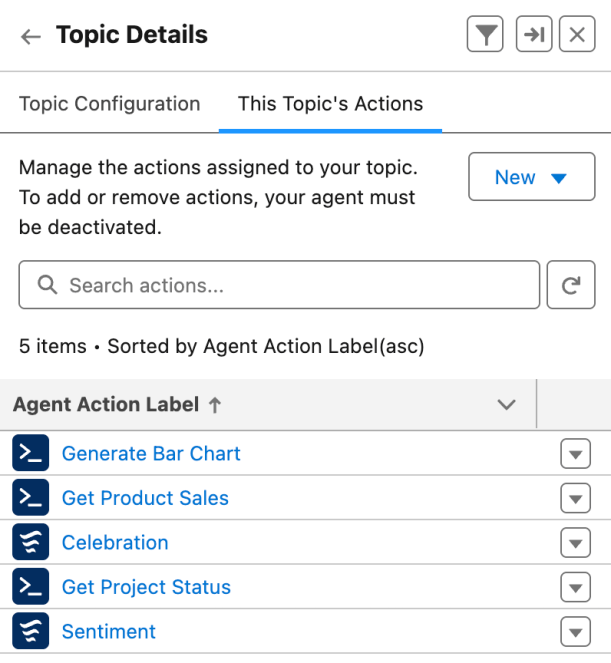

}When building the above agent, I was reminded of a best practice shared by Salesforce MVP, Robert Sösemann recently, which is to keep your actions small enough to be reused by the AI. This means that I could have created an action solely for product sales that generated the graph. Instead, I was able to give the topic instructions to use the graph action when it detects data that fits its inputs. In this way, other actions can generate data, and the AI can now use the graph rendering independently. As you can see below, there is a separation of concerns between actions that retrieve data and those that format it (effectively those that render Rich Text). By crafting the correct instructions, you can teach the AI to effectively chain actions together.
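The snippet above leaves `buildSvg` to the Gist. If you want to experiment before grabbing it, here is a minimal sketch of what such a helper could look like, turning a map of labels to values into a simple bar chart. It is hypothetical: the class `BarChartSvgSketch`, the method `buildBarChartSvg`, and the chart dimensions are assumptions for illustration, not the code in the Gist, and it assumes non-negative values such as sales figures.

```apex
// Hypothetical sketch of a bar chart SVG builder; not the implementation from the Gist.
public class BarChartSvgSketch {

    // Assumed chart geometry, in pixels
    private static final Integer BAR_WIDTH = 40;
    private static final Integer BAR_GAP = 20;
    private static final Integer CHART_HEIGHT = 150;
    private static final Integer LABEL_HEIGHT = 20;

    // Builds an SVG bar chart from label => value pairs (values assumed non-negative).
    // The largest value is drawn at full CHART_HEIGHT; the others are scaled relative to it.
    public static String buildBarChartSvg(Map<String, Decimal> dataMap) {
        // Find the largest value so bars can be scaled against it
        Decimal maxValue = 0;
        for (Decimal value : dataMap.values()) {
            if (value != null && value > maxValue) {
                maxValue = value;
            }
        }

        // Sort labels so the bar order is predictable
        List<String> labels = new List<String>(dataMap.keySet());
        labels.sort();

        Integer width = labels.size() * (BAR_WIDTH + BAR_GAP) + BAR_GAP;
        Integer height = CHART_HEIGHT + LABEL_HEIGHT;

        // Single-quoted attributes, as in the smile SVG, avoid clashing with a double-quoted src="..."
        List<String> parts = new List<String>();
        parts.add('<svg xmlns=\'http://www.w3.org/2000/svg\' width=\'' + width + '\' height=\'' + height + '\'>');

        Integer x = BAR_GAP;
        for (String label : labels) {
            Decimal value = dataMap.get(label);
            if (value == null) {
                value = 0;
            }
            // Multiply before dividing to limit rounding; a zero maximum means no visible bars
            Integer barHeight = maxValue == 0 ? 0 : (value * CHART_HEIGHT / maxValue).intValue();
            Integer y = CHART_HEIGHT - barHeight;
            parts.add('<rect x=\'' + x + '\' y=\'' + y + '\' width=\'' + BAR_WIDTH
                + '\' height=\'' + barHeight + '\' fill=\'steelblue\'/>');
            // Label under each bar; escapeXml() keeps characters like < and & from breaking the markup
            parts.add('<text x=\'' + (x + BAR_WIDTH / 2) + '\' y=\'' + (height - 5)
                + '\' font-size=\'12\' text-anchor=\'middle\'>' + label.escapeXml() + '</text>');
            x += BAR_WIDTH + BAR_GAP;
        }

        parts.add('</svg>');
        return String.join(parts, '');
    }
}
```

For the product sales example earlier (50% for A, 25% for B, and 25% for C), passing `new Map<String, Decimal>{ 'A' => 50.0, 'B' => 25.0, 'C' => 25.0 }` would produce three bars, with A at full height and B and C at half height.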

You can also use Prompt Builder-based actions to generate HTML. This is something the amazing Alba Rivas has already covered very well in this video. I have also captured the other SVG examples used in this post in a Gist here. A word on security: SVG can contain code, so please make sure to only use SVG content you create yourself or that comes from a trusted source. Of note, browsers block code in SVG that is embedded via an img tag, for example `<img src="data:image/svg+xml,<svg>....</svg>"/>`.
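On the img tag point: the chart action earlier embeds raw SVG in a `data:image/svg+xml,` URI. That works for simple markup, but a character such as `#` (for example in a colour like `#ff0000`) can be treated as the start of a URL fragment and truncate the image. A common alternative, swapped in here rather than taken from the Gist, is to Base64-encode the markup. The sketch below is hypothetical (`SvgImgTagSketch` and `toImgTag` are illustrative names), and whether Agentforce's Rich Text rendering accepts Base64 data URIs is an assumption worth testing in your own org first.

```apex
// Hypothetical helper; the chart snippet earlier in this post uses a raw (non-Base64) data URI instead.
public class SvgImgTagSketch {

    // Wraps SVG markup in an <img> tag using a Base64-encoded data URI.
    // Base64 sidesteps characters such as '#', '%' and '"' that would otherwise
    // need URL-escaping inside the src attribute.
    public static String toImgTag(String svg, String altText) {
        String encoded = EncodingUtil.base64Encode(Blob.valueOf(svg));
        // The alt text sits inside an HTML attribute, so escape it
        String alt = altText == null ? '' : altText.escapeHtml4();
        return '<img src="data:image/svg+xml;base64,' + encoded + '" alt="' + alt + '"/>';
    }
}
```

Because scripts inside SVG loaded through an img tag do not run, this keeps the same protection described above while making the encoding independent of which characters the SVG happens to contain.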

What's next? Well, I am keen to explore the upcoming ability to use LWCs in Agentforce. This gives you control over how you request input from the user and how the results of actions are rendered, potentially enabling things like file uploads, live status updates, and more! In the meantime, check this out from Avi Rai.

Meanwhile, enjoy!

I’m proud to announce the third edition of my book has now been released. Back in March this year I took the plunge to start updating many key areas and adding two brand new chapters. In the 2 years and 8 months since the last edition, there have been several platform releases and an increasing number of new features and innovations that made this the biggest update ever! This edition also embraces the platform’s rebranding to Lightning, hence the book is now entitled Salesforce Lightning Platform Enterprise Architecture.


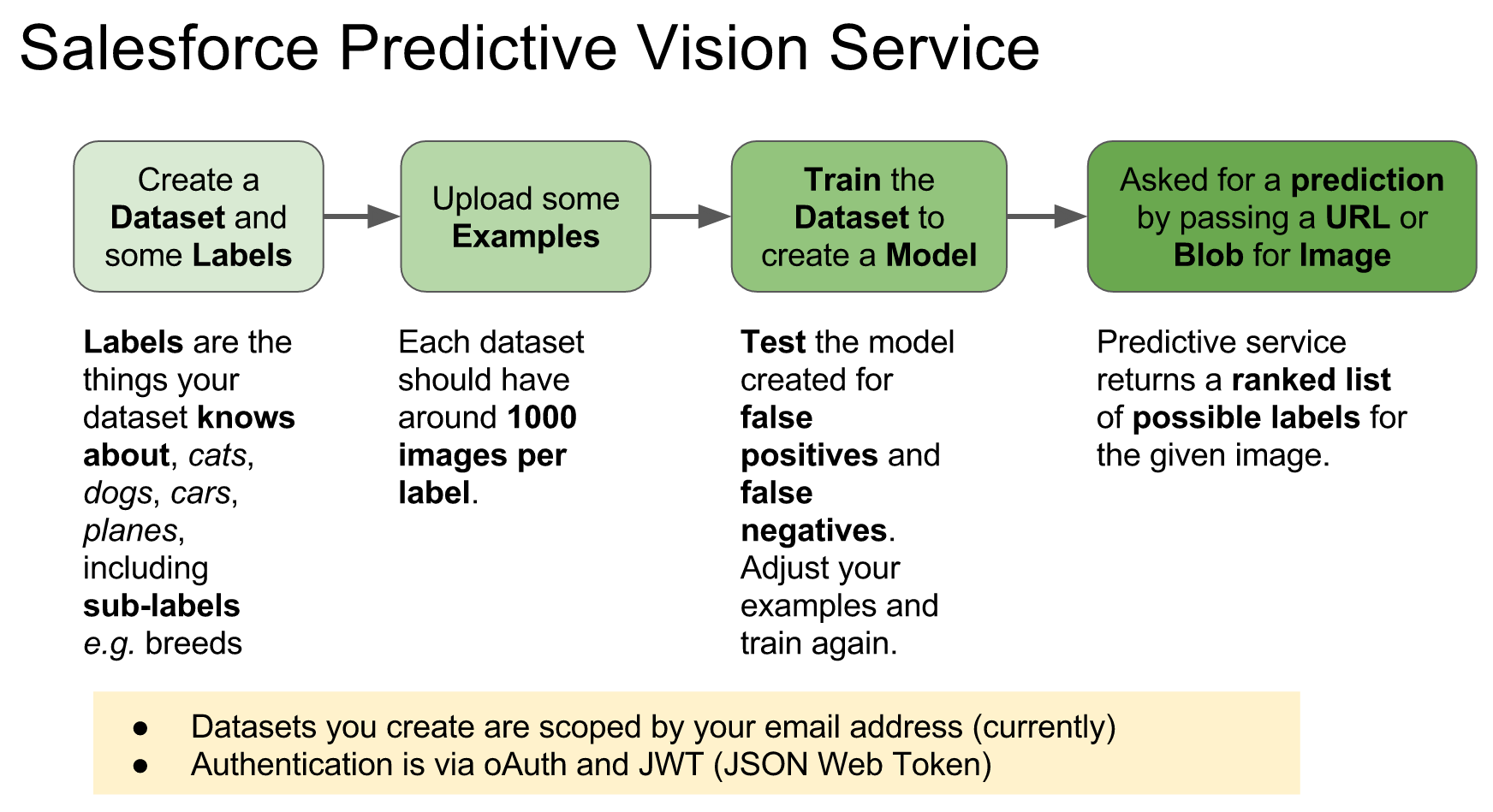

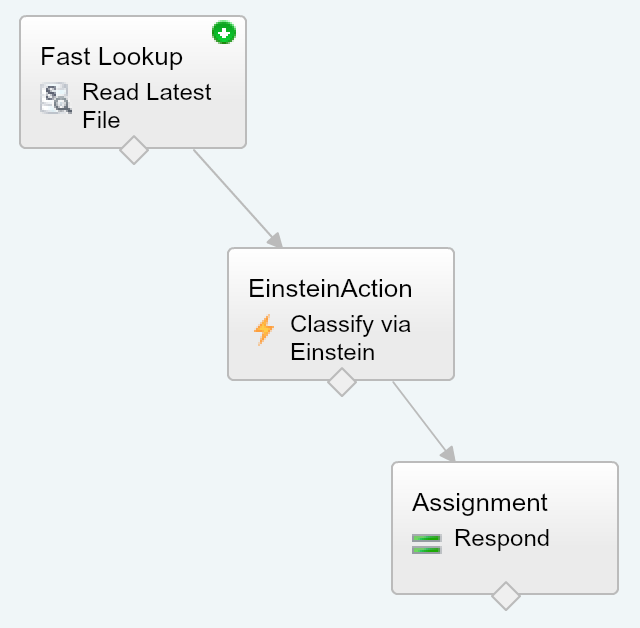

The service introduces a few new terms to get your head round. Firstly, a dataset is a named container for the types of images (labels) you want to recognise. The
