The Salesforce platform has long had a place in my heart for the empowerment it brings to people who like to create. As a developer, I love to create and to feel the satisfaction of helping others. With Salesforce I can create without code as well. Yet as much as I love the declarative side of building, I do love to code!

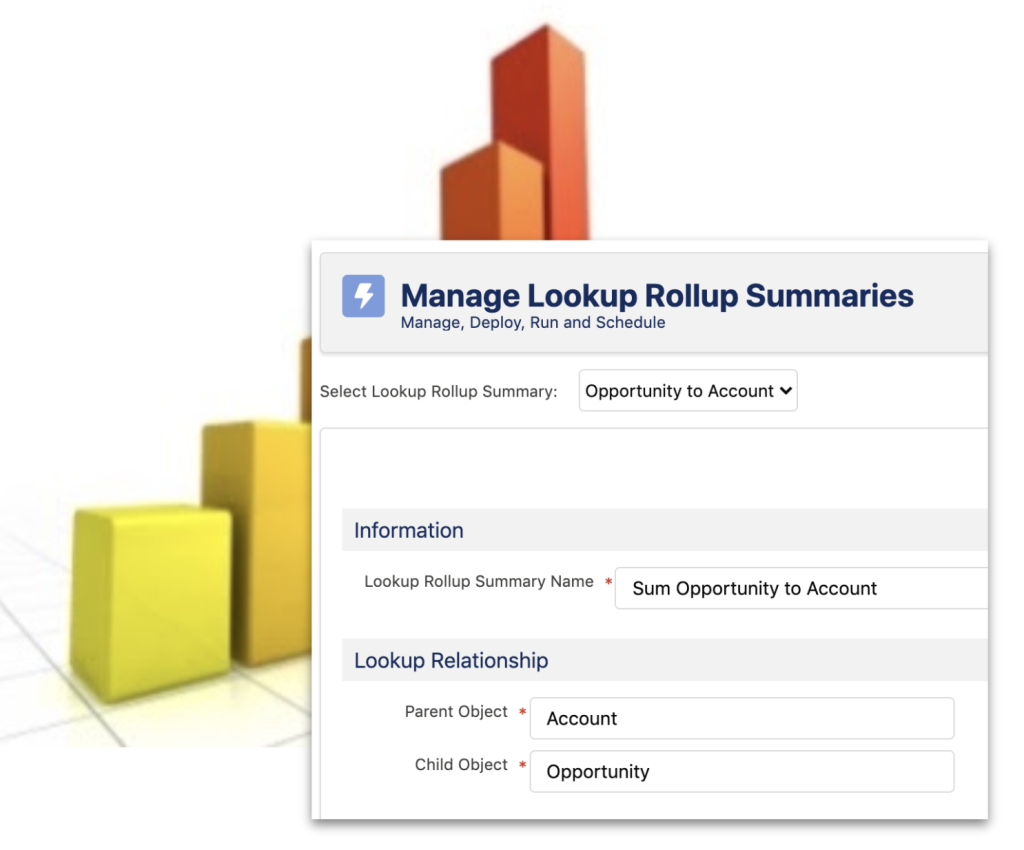

In 2013 I found a way to magnify this feeling by closing the gap between coding and helping other creators, also known as Admins. This blog post covers a big next step in the future of the Declarative Lookup Rollup Summaries Tool, or “Dolores” (short for DLRS) as the community refers to it, and how you can help.

SUPPORT: Declarative Lookup Rollup Summary (DLRS) Tool: This tool is open source and is supported by the community in a volunteer capacity. Please post your questions to the Trailblazer Community Group. Thank you!

Memory Lane…

DLRS was, in a way, born out of a solution looking for a problem. Around the same time in 2013, I was experimenting with the Metadata API, automating aspects of Setup via Apex and building on the platform. In doing so I discovered some of its declarative gaps. One such gap was the inability to define rollups over relationships other than master-detail, such as lookups; resolving this within the platform itself required Apex, and still does today. Every now and again, though, I tend to see connections between things that are not always obvious (and maybe a little crazy), and so I wondered: what if an Admin tool generated and managed the code?

I am also a frugal developer who likes to focus on the job at hand, and so I look to open source when I can. After a quick internet search, I found Abhinav Gupta’s open source Apex library LREngine, also known as Salesforce Lookup Rollup Summaries. However, it still required a developer to write an Apex trigger, and it offered no way to configure it without a developer. And so the neurons fired and the “Declarative” Lookup Rollup Summaries tool was born! Over time, LREngine received a number of enhancements and optimizations driven by DLRS requirements, and the ways in which rollups could be invoked and configured were expanded around the library. The rest, as they say, is history!

DLRS Stats and a Realization…

There have been 39 releases to date, and I am proud to have had 18 GitHub contributors supporting the tool with amazing features, fixes and improvements, along with an amazing Chatter Group on the Trailblazer Community with over 1,140 members. DLRS has now been installed in tens of thousands of orgs. When I started the Chatter group, I tried my best to answer each and every question. What I began to find was that the community started helping the community: superstars like Jon LaRosa, Dan Donin, Jim Bartek and many others are so engaged and supportive that it’s wonderful to see.

The reality, though, is that over the past few years my time and focus have not kept pace with the increasing and ongoing demand for the tool. Squeezing in some coding during holiday time doesn’t feel like quite enough, and the community and users of this tool deserve more. And so, with mixed feelings, I sent the following tweet (well, actually my wife pressed the send button for me; she knows how much I love this tool and generally knows what’s best), and so here we are!

DLRS needs a team, and you can help

TL;DR If you’d like to join a team of community members helping to ensure the future of DLRS, email sfdo-opensource@salesforce.com for more information on how you can help.

It’s very important to me that it remains open source and free to the community, and that it gets the love and attention it deserves. Several of the duties involved in managing it, such as packaging, make me a bit of a bottleneck, and some knowledge about testing and the code base exists only in my head. So I originally reached out looking for a lead developer to help out.

I had some great responses to the Twitter post, but it was the suggestion to have the project managed by a community team, as part of the Salesforce Open Source Commons Program, that showed me another path: one that could ensure DLRS continues to thrive and expand well into the future.

The Open Source Commons (OSC) program provides a framework that wraps a great process and set of tools around open source projects, in partnership between community leaders and Salesforce. Learning about the OSC program made me realize that to ensure DLRS would truly be a sustainable and trusted package for its tens of thousands of users, more than a new lead developer would be needed. Maintaining a package like DLRS should be done by a team of volunteers, including those who can help others with support questions, those who want to help with docs, those who like to manage feature backlogs or build more automated release processes, and the list goes on.

There are already eight open source projects involved with the program, ranging from managed packages that help Naval Volunteers (Ombudsman) with case management, to product support help videos, to DEI ecosystem improvement. The program hosts multiple training opportunities and very popular Community Sprint events (similar to hackathons) throughout the year, to bring exposure and new volunteers to projects.

Photo caption: Open Source Community Sprint participants, Philadelphia, October 2019

I have met with Cori O’Brien, Senior Manager of the Open Source Commons, who is very keen to share the benefits of the program with the DLRS community, especially those who are keen to help in whatever way they can. If you would like to help, or just learn more about the program, please send an email to sfdo-opensource@salesforce.com and we will add you to a kick-off meeting to be held in mid-August.

Meanwhile, thank you all for the support you have given this tool; it has truly been something I will be proud of for the rest of my life. I am very excited about this next step, and rest assured I will be around to continue contributing to the project. Most importantly, so will many others!


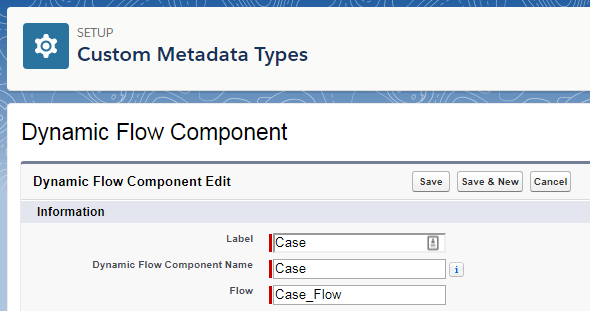

The Dynamic Flow Component (DFC) allows you to further extend your use of auto launch and screen

The Dynamic Flow Component (DFC) allows you to further extend your use of auto launch and screen

Its been nearly 9 years since i created my first Salesforce developer account. Back then I was leading a group of architects building on premise enterprise applications with Java J2EE and Microsoft .Net. It was fair to say my decision to refocus my career not only in building the

Its been nearly 9 years since i created my first Salesforce developer account. Back then I was leading a group of architects building on premise enterprise applications with Java J2EE and Microsoft .Net. It was fair to say my decision to refocus my career not only in building the

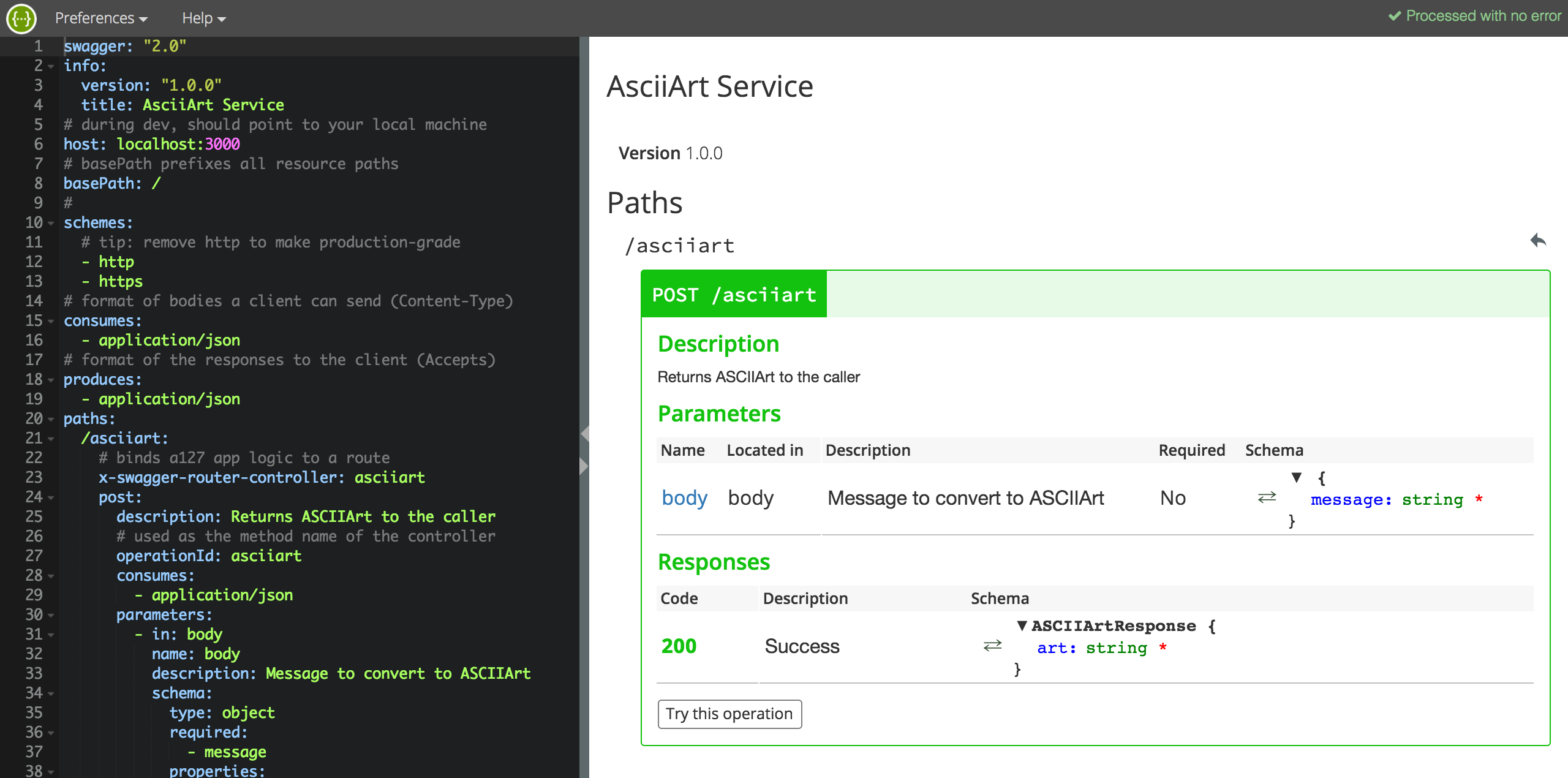

Swagger Editor, the interactive editor shown in the first screenshot of this blog.

Swagger Editor, the interactive editor shown in the first screenshot of this blog.