Boilerplate code is code that we repeat often with little or no variation. When writing field validations in Apex, especially within Apex Triggers, there are a number of examples of this, most notably checking whether a field value has changed and querying for related records, which also requires following good bulkification best practices. This blog presents a small proof-of-concept framework aimed at reducing such boilerplate. It is made possible by a small but critical enhancement to the Apex runtime in Winter ’21 that allows developers to add field errors dynamically. Additionally, for those practicing unit testing, the enhancement allows tests to assert such errors without DML!

Here is a very basic demonstration of the new addError and getErrors methods:

```apex
Opportunity opp = new Opportunity();
opp.addError('Description', 'Error Message!');
List<Database.Error> errors = opp.getErrors();
System.assertEquals(1, errors.size());
System.assertEquals('Error Message!', errors[0].getMessage());
System.assertEquals('Description', errors[0].getFields()[0]);
```
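Alongside the field-name version shown above, Winter ’21 (API version 50.0) also added an addError overload that accepts an SObjectField token. The sketch below is the same demonstration rewritten to use it, assuming an org on API version 50.0 or later. The token form is checked by the compiler and suits generic code that already works with field tokens rather than field names.

```apex
Opportunity opp = new Opportunity();

// SObjectField token overload: a misspelt field reference is a compile error
// here, whereas a misspelt field name string is only discovered at runtime
SObjectField descriptionField = Opportunity.Description;
opp.addError(descriptionField, 'Error Message!');

// The error is recorded on the in-memory record; no DML is involved
List<Database.Error> errors = opp.getErrors();
System.assertEquals(1, errors.size());
System.assertEquals('Error Message!', errors[0].getMessage());
System.assertEquals('Description', errors[0].getFields()[0]);
```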

However, to really appreciate the value these two features bring to frameworks, let us first review a use case and the traditional approach to coding such validation logic. Our requirements are:

- When updating an Opportunity, validate the Description and AccountId fields.
- If the StageName field changes to “Closed Won” and the Description field has changed, ensure it is not null.
- If the AccountId field changes, ensure that the NumberOfEmployees field on the related Account is not null.
- Ensure the code is bulkified and queries are only performed when needed.

The following code implements the above requirements but contains some boilerplate code.

```apex
// Classic style validation
// Assumes oldMap and newMap are Map<Id, Opportunity>, for example cast from
// Trigger.oldMap and Trigger.newMap in an Opportunity trigger handler
switch on Trigger.operationType {
    when AFTER_UPDATE {
        // Prescan to bulkify querying for related Accounts
        Set<Id> accountIds = new Set<Id>();
        for (Opportunity opp : newMap.values()) {
            Opportunity oldOpp = oldMap.get(opp.Id);
            if (opp.AccountId != oldOpp.AccountId) { // AccountId changed?
                accountIds.add(opp.AccountId);
            }
        }
        // Query related Account records?
        Map<Id, Account> associatedAccountsById = accountIds.size() == 0 ?
            new Map<Id, Account>() :
            new Map<Id, Account>([select Id, NumberOfEmployees from Account where Id in :accountIds]);
        // Validate
        for (Opportunity opp : newMap.values()) {
            Opportunity oldOpp = oldMap.get(opp.Id);
            if (opp.StageName != oldOpp.StageName) { // Stage changed?
                if (opp.StageName == 'Closed Won') { // Stage closed won?
                    if (opp.Description != oldOpp.Description) { // Description changed?
                        if (opp.Description == null) { // Description null?
                            opp.Description.addError('Description must be specified when Opportunity is closed');
                        }
                    }
                }
            }
            if (opp.AccountId != oldOpp.AccountId) { // AccountId changed?
                Account acct = associatedAccountsById.get(opp.AccountId);
                if (acct != null) { // Account queried?
                    if (acct.NumberOfEmployees == null) { // NumberOfEmployees null?
                        opp.AccountId.addError('Account does not have any employees');
                    }
                }
            }
        }
    }
}
```

Below is the same validation implemented using a framework built to reduce boilerplate code.

```apex
SObjectFieldValidator.build()
    .when(TriggerOperation.AFTER_UPDATE)
        .field(Opportunity.Description).hasChanged().isNull().addError('Description must be specified when Opportunity is closed')
            .when(Opportunity.StageName).hasChanged().equals('Closed Won')
        .field(Opportunity.AccountId).whenChanged().addError('Account does not have any employees')
            .when(Account.NumberOfEmployees).isNull()
    .validate(operation, oldMap, newMap);
```

The SObjectFieldValidator framework uses a fluent-style API design and, as such, allows the validator to be constructed dynamically with ease. Additionally, configured instances of it can be passed around and extended by other code paths and modules, with the validation itself performed in one pass. The framework also attempts some smarts to bulkify queries (in this case for related Accounts) and only performs them if the target field or related fields have been modified, thus keeping processing time to a minimum. The test code for either approach can, of course, be written in the usual way, as shown below.

```apex
// Given
Account relatedAccount = new Account(Name = 'Test', NumberOfEmployees = null);
insert relatedAccount;
Opportunity opp = new Opportunity(
    Name = 'Test', CloseDate = Date.today(), StageName = 'Prospecting',
    Description = 'X', AccountId = null);
insert opp;
opp.StageName = 'Closed Won';
opp.Description = null;
opp.AccountId = relatedAccount.Id;

// When
Database.SaveResult saveResult = Database.update(opp, false);

// Then
List<Database.Error> errors = saveResult.getErrors();
System.assertEquals(2, errors.size());
System.assertEquals('Description', errors[0].getFields()[0]);
System.assertEquals('Description must be specified when Opportunity is closed', errors[0].getMessage());
System.assertEquals('AccountId', errors[1].getFields()[0]);
System.assertEquals('Account does not have any employees', errors[1].getMessage());
```

While you still need code coverage for your Apex Trigger logic, those practicing unit testing may prefer to avoid DML so they can assert a wider variety of validation scenarios. The following code is entirely free of SOQL and DML statements and therefore runs faster. It injects the related records rather than letting the framework query them on demand. The SObjectFieldValidator instance is constructed and configured in a separate class, OpportunityTriggerHandler, for reuse.

```apex
// Given
Account relatedAccount =
    new Account(Id = TEST_ACCOUNT_ID, Name = 'Test', NumberOfEmployees = null);
Map<Id, SObject> oldMap =
    new Map<Id, SObject> { TEST_OPPORTUNIT_ID =>
        new Opportunity(Id = TEST_OPPORTUNIT_ID, StageName = 'Prospecting', Description = 'X', AccountId = null) };
Map<Id, SObject> newMap =
    new Map<Id, SObject> { TEST_OPPORTUNIT_ID =>
        new Opportunity(Id = TEST_OPPORTUNIT_ID, StageName = 'Closed Won', Description = null, AccountId = TEST_ACCOUNT_ID) };
Map<SObjectField, Map<Id, SObject>> relatedRecords =
    new Map<SObjectField, Map<Id, SObject>> {
        Opportunity.AccountId =>
            new Map<Id, SObject>(new List<Account> { relatedAccount }) };

// When
OpportunityTriggerHandler.getValidator()
    .validate(TriggerOperation.AFTER_UPDATE, oldMap, newMap, relatedRecords);

// Then
List<Database.Error> errors = newMap.get(TEST_OPPORTUNIT_ID).getErrors();
System.assertEquals(2, errors.size());
System.assertEquals('AccountId', errors[0].getFields()[0]);
System.assertEquals('Account does not have any employees', errors[0].getMessage());
System.assertEquals('Description', errors[1].getFields()[0]);
System.assertEquals('Description must be specified when Opportunity is closed', errors[1].getMessage());
```
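The test above relies on TEST_ACCOUNT_ID and TEST_OPPORTUNIT_ID constants whose definitions are not shown. Because nothing is inserted, they only need to be well-formed Ids carrying the right object key prefix. One possible way to define them is sketched below; the test class name and the fakeId helper are hypothetical and not part of the framework.

```apex
@IsTest
private class OpportunityTriggerHandlerTest {

    // Hypothetical fake Ids: well-formed, but never inserted into the database
    private static final Id TEST_ACCOUNT_ID = fakeId(Account.SObjectType, 1);
    private static final Id TEST_OPPORTUNIT_ID = fakeId(Opportunity.SObjectType, 1);

    // Hypothetical helper: builds a 15-character Id from the object's
    // 3-character key prefix followed by a zero-padded 12-digit counter
    private static Id fakeId(SObjectType objectType, Integer counter) {
        String keyPrefix = objectType.getDescribe().getKeyPrefix();
        return Id.valueOf(keyPrefix + String.valueOf(counter).leftPad(12, '0'));
    }

    // ... test methods such as the one above go here
}
```

Passing a different counter value gives distinct Ids when a test needs several records of the same type.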

Finally, it is worth noting that such a framework can only get you so far, and there will be scenarios where you need richer criteria. This is something that could be explored further through the SObjectFieldValidator.FieldValidationCondition base type, which allows coded field validations to be added via the condition method. The framework is pretty basic, as I really do not have a great deal of time these days to build it out more fully, so I warmly invite anyone interested to take it further.
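For anyone taking the idea further, the core enabler can be seen in isolation: reading field values through SObjectField tokens and, since Winter ’21, adding errors to fields the same way. The class below is a minimal hypothetical sketch, not the SObjectFieldValidator source; FieldRuleSketch and its method names are illustrative only. It shows a generic "changed to null" rule and a helper that collects changed lookup Ids so that a related-record query can be skipped when nothing relevant changed.

```apex
// Hypothetical helper class, NOT the SObjectFieldValidator source. It shows how
// dynamic field access plus the Winter '21 addError(SObjectField, String)
// overload let one generic method replace hand-written per-field checks.
public class FieldRuleSketch {

    // True when the field value differs between the old and new versions
    // (a missing old record, as on insert, counts as a change)
    public static Boolean hasChanged(SObject oldRecord, SObject newRecord, SObjectField fieldToken) {
        return oldRecord == null || oldRecord.get(fieldToken) != newRecord.get(fieldToken);
    }

    // Adds errorMessage to the given field on every record whose value changed
    // and is now null; oldMap must not be null (pass an empty map on insert)
    public static void errorIfChangedToNull(SObjectField fieldToken, String errorMessage,
            Map<Id, SObject> oldMap, Map<Id, SObject> newMap) {
        for (SObject newRecord : newMap.values()) {
            SObject oldRecord = oldMap.get(newRecord.Id);
            if (hasChanged(oldRecord, newRecord, fieldToken) && newRecord.get(fieldToken) == null) {
                newRecord.addError(fieldToken, errorMessage);
            }
        }
    }

    // Returns the new, non-null values of a lookup field that changed, so the
    // caller can skip querying related records when the set is empty
    public static Set<Id> changedLookupIds(SObjectField lookupField,
            Map<Id, SObject> oldMap, Map<Id, SObject> newMap) {
        Set<Id> changedIds = new Set<Id>();
        for (SObject newRecord : newMap.values()) {
            SObject oldRecord = oldMap.get(newRecord.Id);
            Id lookupId = (Id) newRecord.get(lookupField);
            if (lookupId != null && hasChanged(oldRecord, newRecord, lookupField)) {
                changedIds.add(lookupId);
            }
        }
        return changedIds;
    }
}
```

A trigger handler might, for example, call FieldRuleSketch.errorIfChangedToNull(Opportunity.Description, 'Description must be specified when Opportunity is closed', oldMap, newMap) and only query Accounts when FieldRuleSketch.changedLookupIds(Opportunity.AccountId, oldMap, newMap) returns a non-empty set. A fuller framework would add further conditions, such as the StageName check, and wrap these building blocks in a fluent API.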

Enjoy!

I’m proud to announce that the third edition of my book has now been released. Back in March this year I took the plunge to start updating many key areas and to add two brand-new chapters. In the 2 years and 8 months since the last edition, there have been several platform releases and an increasing number of new features and innovations, which made this the biggest update ever! This edition also embraces the platform’s rebranding to Lightning; hence the book is now entitled Salesforce Lightning Platform Enterprise Architecture.


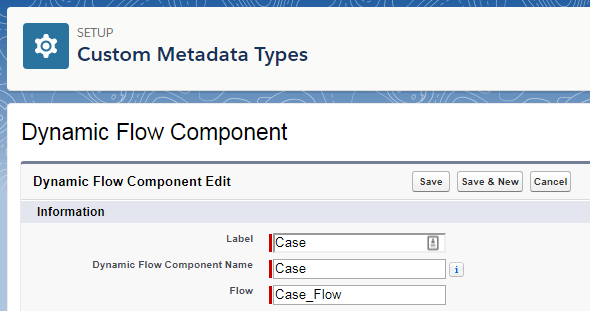

The Dynamic Flow Component (DFC) allows you to further extend your use of auto launch and screen
