AI services are more accessible to developers than ever before. Salesforce acquired MetaMind last year and made some big announcements at Dreamforce 2016. Like many developers, I was keen to find out about its API. The answer at the time was “check back with us next year!”

With Spring ’17 that question has been answered, at least as far as image recognition is concerned, with the availability of the Salesforce Einstein Predictive Vision Service (Pilot). The pilot is open to the public and is free to sign up for.

True AI consists of recognition, be that visual or spoken, performing actions, and the final and most critical piece, learning. This blog explores the spoken and visual recognition piece further, with the added help of Flow for performing practically any action you can envision!

You may recall a blog from last year relating to integrating Salesforce with Amazon Echo. To explore the new Einstein API, I decided to leverage that work further in order to trigger recognition of my pictures from Alexa. The use of Salesforce Flow also enabled easy extensibility via custom Apex Actions. Thus the Einstein Apex Action was born! After a small bit of code and some configuration I had a working voice-activated image recognition demo up and running.

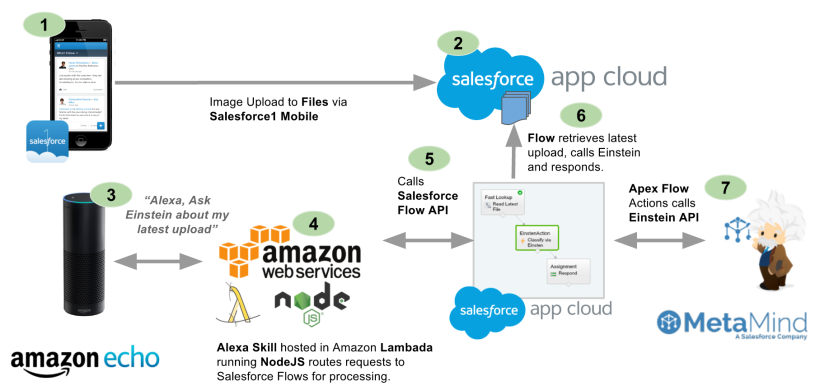

The following diagram breaks down what just happened in the video above, followed by a deeper walk through of the Predictive Vision Service and how to call it.

1. Using the Salesforce1 mobile app, I uploaded an image using the Files feature.

2. Salesforce stores this in the ContentVersion object for later querying (step 6).

3. Using the Alexa skill, called Einstein, I was able to “Ask Einstein about my photo”.

4. This NodeJS skill runs on Amazon and simply routes requests to Salesforce Flow.

5. Spoken terms are passed through to a named Flow via the Flow API.

6. The Flow is simple in this case: it queries ContentVersion for the latest upload.

7. The Flow then calls the Einstein Apex Action, which in turn calls the Einstein REST API via Apex (more on this later). Finally, a Flow assignment takes the resulting prediction of what the image actually shows and uses it to build a spoken response.

Standard Example: The above example exposes the Einstein API in an Apex Action purely to integrate with the Amazon Echo use case. The pilot documentation walks you through a standalone Apex and Visualforce example to get you started.

How does the Einstein Predictive Vision Service API work?

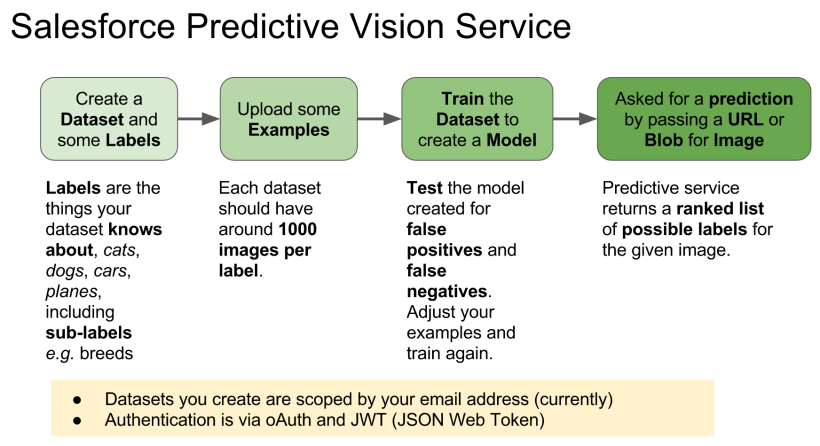

The service introduces a few new terms to get your head round. Firstly, a dataset is a named container for the types of images (labels) you want to recognise. The demo above uses a predefined dataset and model. A model is the output from the process of taking examples of each of your dataset's labels and processing them (training). Initiating this process is pretty easy: you just make a REST API call with your dataset ID. All the recognition magic happens behind the scenes, and you just poll until it's done. After that, all you have to do is test the model with other images. The service returns ranked predictions (using the dataset's labels) of what it thinks your picture shows. When I ran the pictures above of my family dogs for the first time, I was pretty impressed that it detected the breeds.
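As a rough sketch of the "poll for when it's done" step, the Apex below fetches a training status and checks whether the model is ready to use. Treat it as an illustration under assumptions rather than the official client: the endpoint URL, the `SUCCEEDED` status value, and the class and method names are my own guesses for the sketch, so confirm them against the pilot documentation. The field names (`status`, `progress`, `failureMsg`, `modelId`) match a training response a reader posted in the comments on this post. You would also need a Remote Site Setting for whichever host you end up calling.

```apex
public with sharing class EinsteinTrainingStatusSketch {

    // Custom exception so callers can tell sketch failures apart from system errors
    public class SketchException extends Exception {}

    // Subset of the fields in a training status response; JSON.deserialize
    // ignores any other attributes present in the response body
    public class TrainingStatus {
        public String modelId;
        public String status;
        public Double progress;
        public String failureMsg;
    }

    // ASSUMPTION: pilot endpoint for training status, confirm in the pilot docs
    private static final String TRAIN_ENDPOINT = 'https://api.metamind.io/v1/vision/train/';

    // Turns a JSON response body into a typed TrainingStatus
    public static TrainingStatus parse(String jsonBody) {
        return (TrainingStatus) JSON.deserialize(jsonBody, TrainingStatus.class);
    }

    // Makes a single status request for the given model ID
    public static TrainingStatus fetch(String accessToken, String modelId) {
        HttpRequest req = new HttpRequest();
        req.setMethod('GET');
        req.setEndpoint(TRAIN_ENDPOINT + EncodingUtil.urlEncode(modelId, 'UTF-8'));
        req.setHeader('Authorization', 'Bearer ' + accessToken);
        HttpResponse res = new Http().send(req);
        if (res.getStatusCode() != 200) {
            throw new SketchException('Unexpected response: ' + res.getStatus());
        }
        return parse(res.getBody());
    }

    // Returns true once training has finished; throws if training failed.
    // ASSUMPTION: 'SUCCEEDED' is the success value ('FAILED' appears in the comments)
    public static Boolean isReady(TrainingStatus ts) {
        if (ts.status == 'FAILED') {
            throw new SketchException('Training failed: ' + ts.failureMsg);
        }
        return ts.status == 'SUCCEEDED';
    }
}
```

Apex has no sleep call, so rather than looping you would typically call `fetch` again from a scheduled or queueable job (or simply re-run it from anonymous Apex) until `isReady` returns true.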

While quite fiddly at times, it is well worth walking through how to set up your own image datasets and training to get a hands-on example of the above.

How do I call the Einstein API from Apex?

Salesforce saved me the trouble of wrapping the REST API in Apex and has started an Apex wrapper here in this GitHub repo. When you sign up you get a private key file that you have to upload into Salesforce to authenticate the calls. Currently the private key file the pilot gives you seems to be scoped to your org user's associated email address.

    public with sharing class EinsteinAction {

        // Output type exposed to Flow: the predicted label and its probability
        public class Prediction {
            @InvocableVariable
            public String label;
            @InvocableVariable
            public Double probability;
        }

        @InvocableMethod(label='Classify the given files' description='Calls the Einstein API to classify the given ContentVersion files.')
        public static List<EinsteinAction.Prediction> classifyFiles(List<ID> contentVersionIds) {
            // Get an access token using the VisionController class from the GitHub repo
            String access_token = new VisionController().getAccessToken();
            // Only the first file passed in is handled (see the note that follows)
            ContentVersion content = [SELECT Title, VersionData FROM ContentVersion WHERE Id IN :contentVersionIds LIMIT 1];
            List<EinsteinAction.Prediction> predictions = new List<EinsteinAction.Prediction>();
            // Classify the file contents using the predefined general image model
            for (Vision.Prediction vp : Vision.predictBlob(content.VersionData, access_token, 'GeneralImageClassifier')) {
                EinsteinAction.Prediction p = new EinsteinAction.Prediction();
                p.label = vp.label;
                p.probability = vp.probability;
                predictions.add(p);
                break; // Just take the most probable
            }
            return predictions;
        }
    }

NOTE: The above method only handles the first file passed in the parameter list, which is the minimum needed for this demo. To bulkify it you can remove the LIMIT in the SOQL and ideally put the file ID back in the response. It might also be useful to expose the other predictions and not just the first one.
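To make that note a bit more concrete, here is a minimal sketch of what a bulkified variant might look like. It is not from the GitHub repo: the class name `EinsteinBulkAction`, the `FilePrediction` type and its `rankedLabels` field are hypothetical names of my own, and it assumes `Vision.predictBlob` returns its predictions ranked most probable first, as the demo code above does.

```apex
public with sharing class EinsteinBulkAction {

    // Hypothetical output type: one result per input file, carrying the file ID
    // so the Flow can match each prediction back to its file
    public class FilePrediction {
        @InvocableVariable
        public Id contentVersionId;
        @InvocableVariable
        public String label;          // most probable label, null if none was returned
        @InvocableVariable
        public Double probability;    // probability of the most probable label
        @InvocableVariable
        public List<String> rankedLabels; // every label returned, in the order received
    }

    @InvocableMethod(label='Classify the given files (bulk sketch)' description='Calls the Einstein API once per ContentVersion file and returns one result per file.')
    public static List<FilePrediction> classifyFiles(List<Id> contentVersionIds) {
        String accessToken = new VisionController().getAccessToken();
        // Query all requested files in one go, keyed by Id for lookup
        Map<Id, ContentVersion> filesById = new Map<Id, ContentVersion>(
            [SELECT Id, VersionData FROM ContentVersion WHERE Id IN :contentVersionIds]);
        List<FilePrediction> results = new List<FilePrediction>();
        // Iterate the inputs (not the query results) so the output has the
        // same size and order as the input list
        for (Id contentVersionId : contentVersionIds) {
            FilePrediction result = new FilePrediction();
            result.contentVersionId = contentVersionId;
            result.rankedLabels = new List<String>();
            ContentVersion cv = filesById.get(contentVersionId);
            if (cv != null) {
                for (Vision.Prediction vp : Vision.predictBlob(cv.VersionData, accessToken, 'GeneralImageClassifier')) {
                    if (result.label == null) {
                        // ASSUMPTION: the first prediction is the most probable
                        result.label = vp.label;
                        result.probability = vp.probability;
                    }
                    result.rankedLabels.add(vp.label);
                }
            }
            results.add(result);
        }
        return results;
    }
}
```

Keep in mind this still makes one callout per file and loads every file body into memory, so the usual Apex callout and heap limits put a ceiling on how many files a single invocation can realistically handle.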

The VisionController and Vision Apex classes from the GitHub repo are used in the above code. It looks like the repo is still very much WIP, so I would expect the API to change a bit. The classes also assume that you have followed the standalone example tutorial here.

Summary

This initial API has made it pretty easy to access a key part of AI with what is essentially only a handful of simple REST API calls. I’m looking forward to seeing where this goes and where Salesforce goes next with future AI services.

February 6, 2017 at 7:14 am

Andrew, awesome post as always. Thank you for leading us to these newer & exciting features. You are a true guru.

February 16, 2017 at 1:56 am

Thanks, you're most welcome!

February 7, 2017 at 5:54 am

Nice work, Andy. You’ll be delighted when I publish my fully-fledged PV API on Friday at London’s Calling… 😉

February 16, 2017 at 1:53 am

Thanks! Yeah really wish I could have attended. Was it recorded? Have you blogged on it?

February 9, 2017 at 4:19 am

The Amazon Echo is a really nice invention, and it works well. This shows the importance of the Salesforce cloud, and Apex is also used here. Thank you.

March 15, 2017 at 7:21 am

Did you ever get model training from a zip file working? It almost seemed like the zip file needed to be hosted on a publicly accessible site.

I pulled all the Apex classes, Visualforce pages, and Remote Site settings needed to get the basic functionality together into a single unmanaged package. Just add your own predictive_services.pem into a file and go.

http://www.fishofprey.com/2017/02/visualforce-quick-start-with-salesforce.html

March 15, 2017 at 2:25 pm

Yeah you need to use some command line scripts to upload the contents of the zip.

March 15, 2017 at 5:00 pm

Or use the createDatasetFromZipFileAsync method from https://github.com/muenzpraeger/salesforce-einstein-vision-java/blob/master/src/main/java/com/winkelmeyer/salesforce/einsteinvision/PredictionService.java

We recently added a call to upload a full zip asynchronously.

https://metamind.readme.io/docs/create-a-dataset-zip-async

😉

April 17, 2017 at 4:15 pm

That’s really cool!

Do you know of a way to get the Echo to read a google spreadsheet?

April 17, 2017 at 4:57 pm

Yep, sure, there are a few ways to cut that. The APIs can be used directly from the Node app, or from Apex via Flow.

April 21, 2017 at 12:19 pm

This is really awesome.

May 16, 2017 at 5:15 am

Is it possible to get the image name with probability and Label?

May 18, 2017 at 6:46 pm

Image name for what?

May 23, 2017 at 5:20 pm

Hi Andrew,

I have tried creating the model, but the training status keeps coming back as failed (several times).

Error Message: failureMsg : java.lang.RuntimeException: Training failed to complete.

I have trained several dataset models and used them with the Predictive Vision Service. Just wondering, is there any limitation on model creation under one user? I even tried using another predictive services key to generate a new token, but the same failure message still comes back. Can you please assist and guide us on this issue?

Regards

Pravin

May 23, 2017 at 5:26 pm

{"datasetId":1003289,"datasetVersionId":1417,"name":"Diper and Wipes Model","status":"FAILED","progress":0.17,"createdAt":"2017-05-23T12:59:35.000+0000","updatedAt":"2017-05-23T12:59:55.000+0000","failureMsg":"java.lang.RuntimeException: Training failed to complete","learningRate":0.001,"epochs":3,"object":"training","modelId":"I5EUGVFFCM5FHOPJNMHTOAQRNI","trainParams":null,"trainStats":{"labels":2,"examples":213,"totalTime":null,"trainingTime":null,"lastEpochDone":0,"modelSaveTime":null,"testSplitSize":22,"trainSplitSize":188,"datasetLoadTime":"00:00:01:282"},"modelType":"image"}

This is the response I get when executing the train command.

May 23, 2017 at 10:18 pm

Ah ok that answers my earlier question then! 👍🏻 So this looks like an internal bug and needs raising with Salesforce via a case. You might also try posting on the GitHub repo for the API wrappers, as the devs on that might be able to help with debug. Let me know how you get on, sorry I cannot be of much help in this case.

May 23, 2017 at 10:16 pm

I am not aware of this limit. I do wonder if more detailed error info is not being shown by Java. Can you repro via curl? If so, this will also give you a good basis for a support case.

May 24, 2017 at 6:43 am

Thanks for the info.

It worked today, so maybe it was a server issue.

Regards

Pravin

May 29, 2017 at 2:59 pm

Great article, thank you, Andrew! We have also researched different image recognition APIs; you may find it useful: https://opsway.com/blog/image-recognition-research-choice-api

May 29, 2017 at 5:55 pm

Very useful thank you for sharing!

July 7, 2017 at 9:55 am

I would like to see comparison to https://vize.ai

July 8, 2017 at 1:54 am

Thanks for the comment, will keep it in mind.
